Finding a better way to fight cancer doesn’t always mean discovering a new drug or surgical technique. Sometimes simply defining the disease in greater detail can make a big difference. A more specific diagnosis may let a physician tailor a patient’s treatment more closely, either by choosing existing therapies proven to work better against a particular subtype of the disease or by sparing patients with less aggressive cases from unnecessary complications.

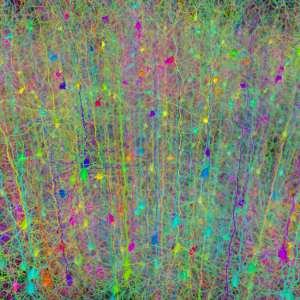

“Finding better ways to stratify kids when they present and decide who needs more therapy and who needs less therapy is one of the ways in which we’ve gotten much better at treating pediatric cancer,” said Samuel Volchenboum, Computation Institute Fellow, Assistant Professor of Pediatrics at Comer Children’s Hospital and Director of the UChicago Center for Research Informatics. “For example, kids can be put in one of several different groups for leukemia, and each group has its own treatment course.”

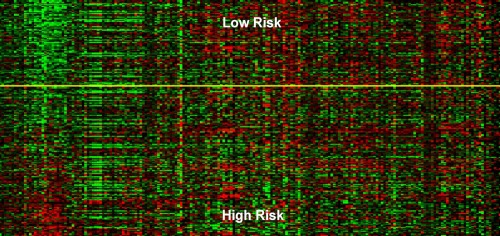

Traditionally, patients have been sorted into risk or treatment groups based on demographic factors such as age or gender, along with relatively simple results from laboratory tests or biopsies. Because cancer is a genetic disease, physicians hope that genetic factors will point the way to even more precise classifications. Yet despite this promise, many of the “genetic signatures” found to correlate with different cancer subtypes are too complex for clinical use, involving dozens or hundreds of genes, and are difficult to validate across patient populations.